Spatio-Temporal Handwriting Imitation

Contribution

To the best of our knowledge, this is the first work to synthesize realistic Western cursive handwriting by imitating the actual temporal writing process. Our method is almost fully automatic (e.g., it requires no manual cutting out of characters), and it renders full lines instead of the individual glyphs or words produced in other works.

Possible use cases we envisioned

- Enable physically impaired persons to write in their personal handwriting.

- Send personal greeting notes in your own handwriting without writing them on paper and scanning them.

- Personalize game/virtual environment experience by using your (or a famous person’s) handwriting.

- Generate handwriting to train modern text recognition engines, which commonly need a lot of training samples.

- Enable forensic researchers to generate and imitate handwriting without needing the writer, and thus to create more robust writer identification methods.

Limitations

- At its current stage, the generation works quite robustly but has problems with artifacts, missing punctuation marks, and synthesizing writers with poor handwriting.

- The imitation of the general writing style works well, as does the pen imitation. However, the stroke imitation is still limited, which can mainly be attributed to the method of A. Graves that we used for this part (see below). Thus, most people, and especially forensic experts, will notice the difference from the original.

- Currently, the system has to be trained on about 30 lines of handwriting from the writer to be imitated.

- Currently, the background is not simulated.

Overview of our currently proposed system

Authors: Martin Mayr, Martin Stumpf, Anguelos Nicolaou, Mathias Seuret, Andreas Maier, Vincent Christlein

Preprint: https://arxiv.org/abs/2003.10593

Code: https://github.com/M4rt1nM4yr/spatio-temporal_handwriting_imitation

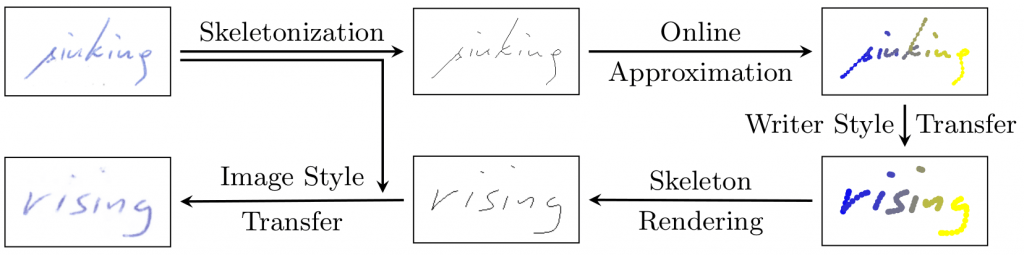

From an offline handwriting sample, we create a temporal (online) sequence, which is used for stroke style modification (top path). Finally, we transfer it back to the offline domain, adapted to the image style, i.e., pen style and color (bottom path).
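The two paths can be read as a chain of stages. The sketch below only illustrates that data flow; every function name and the `text` argument (the new content to be written) are hypothetical placeholders, not the interface of the released code.

```python
from typing import Any, Callable


def imitate_handwriting(
    image: Any,
    text: str,
    to_skeleton: Callable[[Any], Any],
    to_strokes: Callable[[Any], Any],
    restyle_strokes: Callable[[Any, str], Any],
    render: Callable[[Any, Any], Any],
) -> Any:
    """Hypothetical composition of the pipeline stages (placeholders only)."""
    skeleton = to_skeleton(image)                 # offline image -> handwriting skeleton
    strokes = to_strokes(skeleton)                # skeleton -> online pen trajectory
    new_strokes = restyle_strokes(strokes, text)  # top path: stroke style / content transfer
    return render(new_strokes, image)             # bottom path: pen style and color taken from the original image
```

Each stage can be swapped independently, which mirrors how the sections below treat them one at a time.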

Offline to online conversion

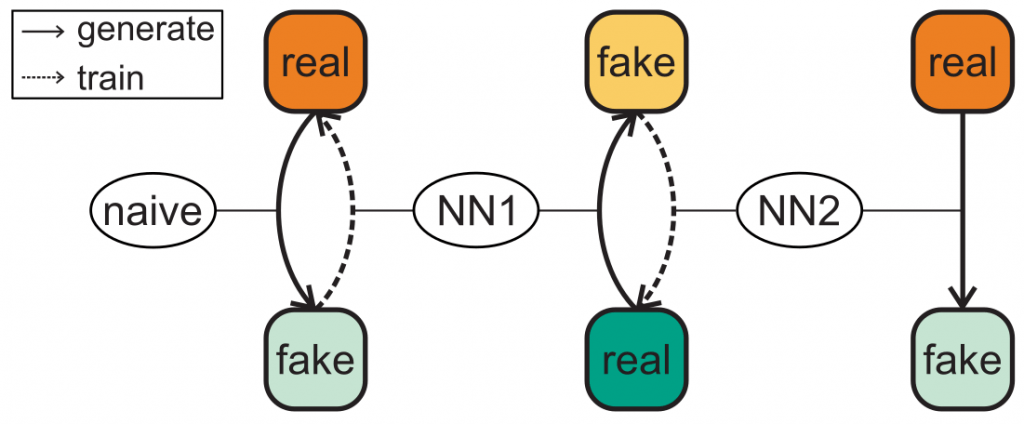

First, we need to create a robust skeleton. To be able to train the system with online data, we developed an iterative knowledge transfer, which can be seen as an unrolled CycleGAN. Unrolling avoids the cycle-consistency constraint, which would allow the network to modify the strokes. Starting from a naive mapping, the knowledge transfer learns a more general mapping from offline handwriting to a handwriting skeleton. We show that this is more robust than classical skeletonization.
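The text does not name the classical skeletonization used for comparison. As an assumed stand-in, the sketch below implements the well-known Zhang-Suen thinning algorithm in plain Python: it repeatedly peels removable boundary pixels off a binary image until a one-pixel-wide skeleton remains. It only makes the baseline concrete and is not the learned knowledge-transfer mapping described above.

```python
from typing import List

Grid = List[List[int]]


def zhang_suen_thinning(img: Grid) -> Grid:
    """Classical thinning (assumed baseline): 1 = ink, 0 = background.

    The one-pixel image border is expected to be background and is never modified.
    """
    img = [row[:] for row in img]
    h, w = len(img), len(img[0])

    def neighbours(y: int, x: int) -> List[int]:
        # P2..P9, clockwise starting at the pixel directly above.
        return [img[y - 1][x], img[y - 1][x + 1], img[y][x + 1], img[y + 1][x + 1],
                img[y + 1][x], img[y + 1][x - 1], img[y][x - 1], img[y - 1][x - 1]]

    changed = True
    while changed:
        changed = False
        for step in (0, 1):  # the two sub-iterations of Zhang-Suen
            to_clear = []
            for y in range(1, h - 1):
                for x in range(1, w - 1):
                    if img[y][x] != 1:
                        continue
                    p = neighbours(y, x)
                    b = sum(p)  # number of ink neighbours
                    a = sum(p[i] == 0 and p[(i + 1) % 8] == 1 for i in range(8))  # 0->1 transitions
                    p2, p4, p6, p8 = p[0], p[2], p[4], p[6]
                    if step == 0:
                        cond = p2 * p4 * p6 == 0 and p4 * p6 * p8 == 0
                    else:
                        cond = p2 * p4 * p8 == 0 and p2 * p6 * p8 == 0
                    if 2 <= b <= 6 and a == 1 and cond:
                        to_clear.append((y, x))
            for y, x in to_clear:  # delete in parallel after the scan
                img[y][x] = 0
            if to_clear:
                changed = True
    return img
```

Such a rule-based thinning reacts to every bump and gap in the ink, which is the kind of fragility a learned mapping aims to avoid.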

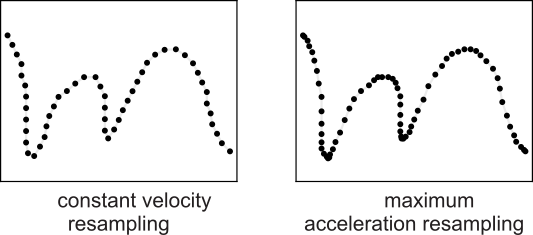

The second step is to generate the stroke sequence. Since we were unable to train the system with constant resampling, we propose ‘maximum acceleration resampling’, which samples points sparsely along straight lines.
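The text only states that maximum acceleration resampling places points sparsely on straight lines. The following is a simplified, hypothetical reading of that idea, not the authors' algorithm: the trajectory is first resampled at constant arc length (the baseline that could not be trained), and then only points with non-negligible discrete acceleration (second difference) are kept, so straight runs collapse to their endpoints while corners and curves keep their points. The function names and the `threshold` parameter are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def constant_resample(points: List[Point], step: float) -> List[Point]:
    """Resample a polyline at equal arc-length spacing (constant resampling)."""
    out = [points[0]]
    carry = 0.0  # distance travelled since the last emitted point
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        d = step - carry  # position of the next sample on this segment
        while d <= seg:
            t = d / seg
            out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
            d += step
        carry = seg - (d - step)
    return out


def acceleration_resample(points: List[Point], threshold: float = 1e-6) -> List[Point]:
    """Hypothetical sparse resampling: keep endpoints and every point whose
    second difference exceeds `threshold`; evenly spaced points on a straight
    line have (near) zero acceleration and are dropped."""
    if len(points) <= 2:
        return list(points)
    kept = [points[0]]
    for prev, cur, nxt in zip(points, points[1:], points[2:]):
        ax = nxt[0] - 2 * cur[0] + prev[0]
        ay = nxt[1] - 2 * cur[1] + prev[1]
        if math.hypot(ax, ay) > threshold:
            kept.append(cur)
    kept.append(points[-1])
    return kept
```

For example, resampling the L-shaped stroke `[(0, 0), (2, 0), (2, 2)]` with `step=1.0` yields five evenly spaced points, which `acceleration_resample` reduces to `(0, 0)`, `(2, 0)` and `(2, 2)`.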

Content transfer

Here we make use of the seminal work by Alex Graves, “Generating Sequences With Recurrent Neural Networks” (https://arxiv.org/abs/1308.0850).

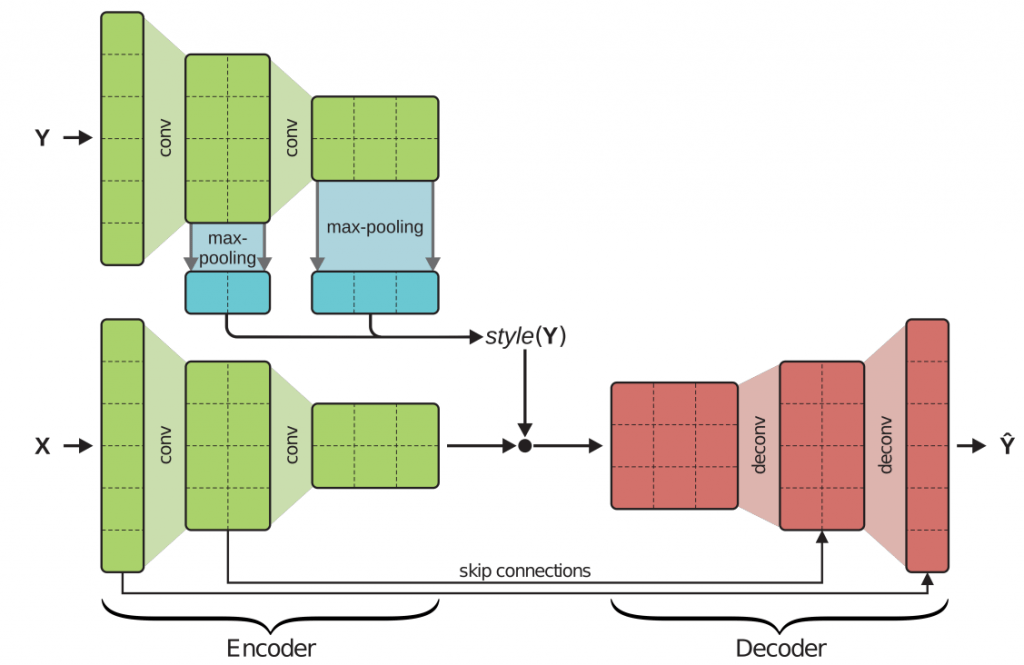
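In Graves' handwriting model, the RNN outputs, at every time step, the parameters of a mixture of bivariate Gaussians over the next pen offset plus an end-of-stroke probability. The sketch below shows only this sampling step in plain Python, assuming the raw network outputs are already available (the RNN itself is omitted). The `bias` parameter follows the biased sampling described in Graves' paper (sharpening the mixture weights and shrinking the standard deviations); which value was used here is not stated, and the function name is illustrative.

```python
import math
import random
from typing import List, Tuple


def sample_pen_step(
    e_hat: float,
    pi_hat: List[float],
    mu1: List[float],
    mu2: List[float],
    s1_hat: List[float],
    s2_hat: List[float],
    rho_hat: List[float],
    rng: random.Random,
    bias: float = 0.0,
) -> Tuple[float, float, bool]:
    """Sample (dx, dy, pen_up) from the raw mixture-density outputs of one step."""
    # End-of-stroke probability via a sigmoid.
    e = 1.0 / (1.0 + math.exp(-e_hat))
    # Mixture weights: numerically stable softmax, sharpened by the sampling bias.
    scaled = [p * (1.0 + bias) for p in pi_hat]
    m = max(scaled)
    exps = [math.exp(p - m) for p in scaled]
    total = sum(exps)
    weights = [x / total for x in exps]
    # Choose one mixture component.
    k = rng.choices(range(len(weights)), weights=weights)[0]
    # Standard deviations (shrunk by the bias) and correlation in (-1, 1).
    s1 = math.exp(s1_hat[k] - bias)
    s2 = math.exp(s2_hat[k] - bias)
    rho = math.tanh(rho_hat[k])
    # Correlated bivariate normal sample built from two independent N(0, 1) draws.
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    dx = mu1[k] + s1 * z1
    dy = mu2[k] + s2 * (rho * z1 + math.sqrt(1.0 - rho * rho) * z2)
    # Lift the pen with probability e.
    pen_up = rng.random() < e
    return dx, dy, pen_up
```

Feeding each sampled offset back into the network and repeating this step produces the new stroke sequence point by point.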

Pen style transfer

For the image/pen style transfer, we modify the well-known pix2pix framework to incorporate the style information.
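The text does not detail how the style information enters pix2pix, so the sketch below covers only the standard pix2pix generator objective that such a modification builds on: a non-saturating adversarial term on the discriminator's patch outputs plus an L1 reconstruction term weighted by λ (λ = 100 in the original pix2pix paper). Inputs are flat lists of floats for simplicity, the function names are illustrative, and the style conditioning itself is not shown.

```python
import math
from typing import Sequence


def adversarial_loss(d_fake: Sequence[float], eps: float = 1e-12) -> float:
    """Non-saturating generator loss: mean of -log D(x, G(x)) over the
    discriminator's patch probabilities for the generated image."""
    return -sum(math.log(max(p, eps)) for p in d_fake) / len(d_fake)


def l1_loss(fake: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute pixel difference between generated and ground-truth image."""
    return sum(abs(a - b) for a, b in zip(fake, target)) / len(fake)


def pix2pix_generator_loss(
    d_fake: Sequence[float],
    fake: Sequence[float],
    target: Sequence[float],
    lam: float = 100.0,
) -> float:
    # Fool the discriminator while staying close to the target image in L1.
    return adversarial_loss(d_fake) + lam * l1_loss(fake, target)
```

For example, a discriminator fooled on every patch (`d_fake = [1.0, 1.0]`) combined with a pixel-perfect output gives a loss of `0.0`; the large λ keeps the output close to the target while the adversarial term sharpens details such as ink texture.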

Results

We created a user study, which is still online: https://forms.gle/MGCPk5UkxnR23FqT9. We encourage everyone to participate so that we can gather more data for our statistics.

Main results:

1. In a Turing test, participants correctly identified our forged samples as fake only 50.3% of the time (and real samples as real 67.7% of the time).

2. In addition, a writer identification system attributed 25% of the forged paragraphs to the person being imitated.